The internet is entering a strange new era.

Videos look real - but aren’t.

Voices sound authentic - but were never spoken.

Photos capture moments that never happened.

AI has crossed a line where seeing is no longer believing. And that forces a hard question:

How do we prove what is real?

One of the strongest answers emerging today is a standard called C2PA. Built on cryptography, and potentially paired with blockchain verification, it may become the foundation for trust in digital media.

AI-generated content is not inherently bad. It’s powerful, creative, and useful. The problem is indistinguishability.

Labels, disclaimers, and platform warnings help - but they are social solutions, not technical ones. And social solutions are easy to bypass.

To fight AI deception at scale, we need cryptographic proof, not promises.

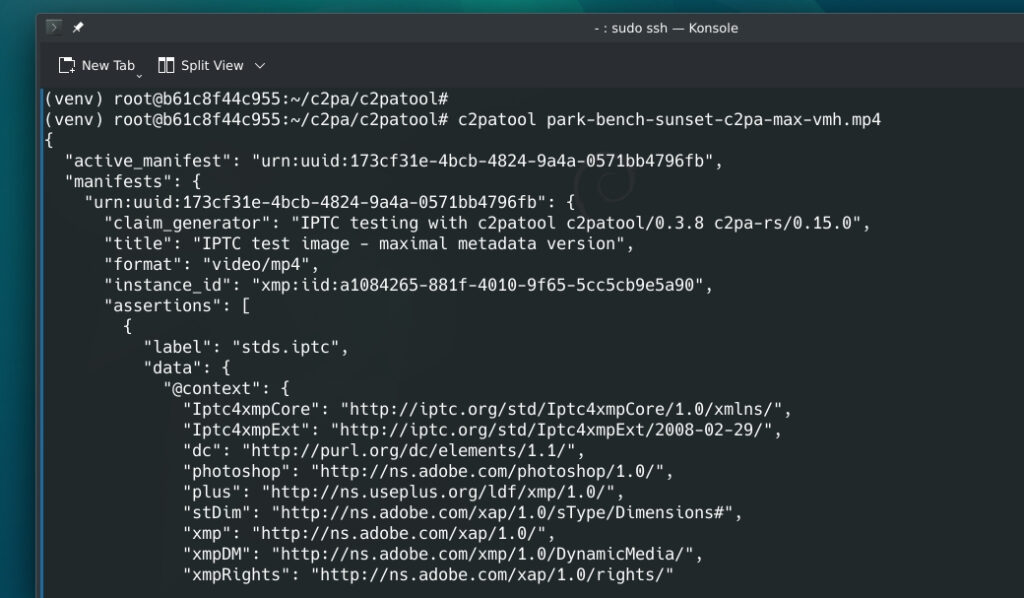

C2PA (Coalition for Content Provenance and Authenticity) is an open technical standard designed to answer one simple question:

Where did this piece of content come from - and can we trust it?

C2PA allows photos and videos to carry verifiable proof of origin and history, embedded directly into the file.

Think of it as:

The C2PA standard relies on public key infrastructure (PKI), the framework of technologies, protocols, and policies that secures internet communications and verifies identities. PKI lets people and software validate media files reliably. C2PA combines cryptographic signatures, Content Credentials (signed provenance records backed by X.509 certificates and public/private key pairs), and a trust list of recognized signers. Together, these verify a file's origin and any later edits.

For a simple example, consider taking a photo with a C2PA-enabled device. As soon as the image is captured, provenance metadata is added automatically. This creates a secure, tamper-evident record that includes:

This establishes a transparent chain of provenance right from the moment of creation.
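Here is a deliberately simplified picture of that tamper-evident record. This minimal Python sketch signs a provenance claim at capture time and verifies it later using only the public key. It illustrates the idea under stated assumptions and is not C2PA's actual format or API. The claim fields, the device name, and helpers like `make_manifest` and `verify_file` are hypothetical. Python's standard library has no public-key signature primitive, so the sketch swaps in a Lamport one-time signature built from SHA-256. Real C2PA signers instead use certificate-backed schemes such as ECDSA. A Lamport key must never sign more than one message.

```python
import hashlib
import json
import secrets

def H(data: bytes) -> bytes:
    """SHA-256, the only primitive a Lamport signature needs."""
    return hashlib.sha256(data).digest()

def digest_bits(message: bytes):
    """Yield the 256 bits of SHA-256(message), most significant bit first."""
    for byte in H(message):
        for i in range(7, -1, -1):
            yield (byte >> i) & 1

def keygen():
    """Lamport one-time key pair: 256 pairs of random secrets; the public key is their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk

def sign(sk, message: bytes):
    """Reveal one secret per digest bit. A Lamport key must sign only ONE message."""
    return [sk[i][bit] for i, bit in enumerate(digest_bits(message))]

def verify(pk, message: bytes, sig) -> bool:
    """Check that each revealed secret hashes to the matching public value; no private key needed."""
    return len(sig) == 256 and all(
        H(s) == pk[i][bit] for i, (s, bit) in enumerate(zip(sig, digest_bits(message)))
    )

def make_manifest(media: bytes, device: str, captured_at: str) -> bytes:
    """Hypothetical, simplified provenance claim (not the real C2PA manifest format)."""
    claim = {
        "content_sha256": hashlib.sha256(media).hexdigest(),
        "device": device,
        "captured_at": captured_at,
    }
    # Canonical JSON so signer and verifier hash byte-identical input
    return json.dumps(claim, sort_keys=True, separators=(",", ":")).encode()

def verify_file(pk, media: bytes, manifest: bytes, sig) -> bool:
    """Valid only if the manifest is untouched AND still describes these exact media bytes."""
    if not verify(pk, manifest, sig):
        return False
    return json.loads(manifest)["content_sha256"] == hashlib.sha256(media).hexdigest()

# At capture time, inside the (hypothetical) camera:
sk, pk = keygen()
photo = b"raw sensor bytes"
manifest = make_manifest(photo, "ExampleCam X1", "2024-05-01T12:00:00Z")
sig = sign(sk, manifest)

# Later, anyone holding only pk can check it:
print(verify_file(pk, photo, manifest, sig))                        # True
print(verify_file(pk, photo + b" edited", manifest, sig))           # False: pixels changed
print(verify_file(pk, photo, manifest.replace(b"X1", b"X2"), sig))  # False: claim changed
```

Two checks have to pass: the signature over the claim, and the match between the claim's hash and the actual bytes. A real verifier would also confirm that the signing certificate chains to an entry on a trust list before believing the `device` field.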

Many ideas have been proposed to fight fake content. C2PA stands out because it checks all the hard boxes:

C2PA is backed by:

Major media organizations and camera manufacturers

This matters because standards only work if everyone adopts them - from cameras to apps to platforms.

C2PA can sign content at the moment it’s created, not afterward.

That’s critical, because provenance added later is far easier to fake.

It’s not owned by one company. Anyone can verify C2PA-signed content without trusting a single platform.

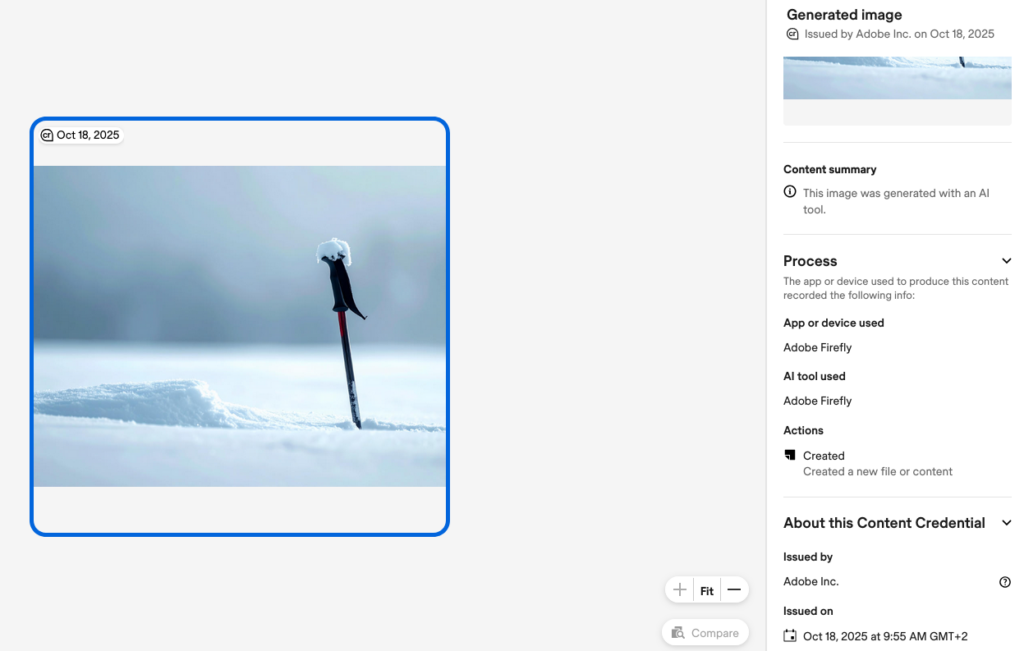

C2PA doesn’t try to ban AI - it requires honest disclosure when AI is used.

C2PA does not magically analyze pixels to detect AI.

Instead, it provides something stronger:

A cryptographic record of how the content was created

AI-generated content can still be signed, but a compliant tool declares in the signed record that it is AI-generated.

This shifts the problem from guessing to verifiable disclosure.
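This minimal sketch shows why the disclosure becomes verifiable: the AI label lives inside the same bytes that get signed, so relabeling invalidates the signature. The claim layout and the helper names `claim_bytes` and `signed_digest` are hypothetical simplifications, not the real C2PA manifest structure. The two URIs are terms from the IPTC "digital source type" vocabulary, used here as label values.

```python
import hashlib
import json

# IPTC digital source type terms, used as the "how was this made?" label
AI_SOURCE = "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
CAPTURE_SOURCE = "http://cv.iptc.org/newscodes/digitalsourcetype/digitalCapture"

def claim_bytes(media: bytes, source_type: str) -> bytes:
    """Serialize a simplified, hypothetical claim deterministically so signer and verifier see identical bytes."""
    claim = {
        "content_sha256": hashlib.sha256(media).hexdigest(),
        "digital_source_type": source_type,
    }
    return json.dumps(claim, sort_keys=True, separators=(",", ":")).encode()

def signed_digest(claim: bytes) -> str:
    """The digest a hash-then-sign scheme would sign; a signature only covers these exact bytes."""
    return hashlib.sha256(claim).hexdigest()

image = b"bytes produced by an image generator"
honest = claim_bytes(image, AI_SOURCE)
relabeled = claim_bytes(image, CAPTURE_SOURCE)  # an attempt to pass it off as a camera photo

print(json.loads(honest)["digital_source_type"].rsplit("/", 1)[-1])  # trainedAlgorithmicMedia
print(signed_digest(honest) == signed_digest(relabeled))  # False: the original signature won't cover the new label
```

This catches relabeling, not removal. Someone can still strip the credentials entirely. In that case a verifier sees no provenance at all, rather than a false "camera original" claim.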

This is where blockchain can play a powerful role - if used correctly.

What blockchain is good at

Blockchain excels at:

That makes it ideal for storing hashes, not large media files.
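This small Python sketch shows why hashes fit on-chain when files don't: whatever the input size, a SHA-256 digest is always 32 bytes. The helper name `sha256_stream` and the in-memory stand-in clips are hypothetical.

```python
import hashlib
import io

def sha256_stream(stream, chunk_size: int = 1 << 20) -> str:
    """Hash a binary file-like object in fixed-size chunks, so a large video never has to fit in memory."""
    h = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        h.update(chunk)
    return h.hexdigest()

# Stand-ins for media files of very different sizes (hypothetical contents)
short_clip = io.BytesIO(b"\x00" * 1_000)
long_clip = io.BytesIO(b"\x00" * 5_000_000)

for clip in (short_clip, long_clip):
    digest = sha256_stream(clip)
    # Either way, only 64 hex characters (32 bytes) would need to be stored on-chain
    print(len(clip.getvalue()), "bytes ->", len(digest) // 2, "byte digest")
```

For a real file, pass an open binary handle, e.g. `with open(path, "rb") as f: sha256_stream(f)`.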

The correct approach

Instead of storing videos on-chain (which is inefficient), the system should:

This creates:

What blockchain does NOT do

Blockchain does not:

It anchors trust; it doesn’t create trust on its own.
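One way to picture "anchor hashes, not files" is a toy hash-chained ledger. This is a hypothetical sketch: `ToyLedger` is an in-memory stand-in with no network, no consensus, and no real blockchain client. It only shows what an anchor can and cannot prove.

```python
import hashlib
import json
import time

GENESIS = "0" * 64

def _block_hash(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

class ToyLedger:
    """Hypothetical in-memory, append-only hash chain standing in for a blockchain."""

    def __init__(self):
        self.blocks = []

    def anchor(self, media: bytes) -> dict:
        """Record only the media's SHA-256 and a timestamp; the media itself stays off-chain."""
        body = {
            "prev": self.blocks[-1]["block_hash"] if self.blocks else GENESIS,
            "content_hash": hashlib.sha256(media).hexdigest(),
            "timestamp": time.time(),
        }
        block = {**body, "block_hash": _block_hash(body)}
        self.blocks.append(block)
        return block

    def is_anchored(self, media: bytes) -> bool:
        """True if these exact bytes were anchored earlier; any edit changes the hash."""
        digest = hashlib.sha256(media).hexdigest()
        return any(b["content_hash"] == digest for b in self.blocks)

    def chain_is_intact(self) -> bool:
        """Changing an old entry invalidates its stored hash; recomputing that hash breaks the next 'prev' link."""
        prev = GENESIS
        for b in self.blocks:
            body = {"prev": b["prev"], "content_hash": b["content_hash"], "timestamp": b["timestamp"]}
            if b["prev"] != prev or b["block_hash"] != _block_hash(body):
                return False
            prev = b["block_hash"]
        return True

ledger = ToyLedger()
original = b"original video bytes"
ledger.anchor(original)
print(ledger.is_anchored(original))           # True
print(ledger.is_anchored(original + b"!"))    # False: an edited copy doesn't match
ledger.anchor(b"deepfake bytes")              # anchoring a fake works just as well...
print(ledger.is_anchored(b"deepfake bytes"))  # True: the ledger proves when, not whether it's real
ledger.blocks[0]["content_hash"] = GENESIS    # try to rewrite history
print(ledger.chain_is_intact())               # False
```

A production design would submit the digest to an actual public chain and keep the media in ordinary storage. The ledger adds an independent, hard-to-rewrite timestamp, while the C2PA signature still has to say who made the content and how.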

AI can fake images.

AI can fake voices.

AI can fake faces.

What it cannot fake, short of breaking the underlying math, is a valid cryptographic signature.

Modern cryptographic systems rely on:

Breaking these systems would require:

AI can imitate reality - but it cannot forge valid cryptographic signatures without the private keys.
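For a sense of scale, take a scheme with about 128-bit security, the usual target for ECDSA on the P-256 curve and for Ed25519. Forging a signature by brute force then means roughly $2^{128}$ operations. Assume, purely hypothetically and generously, an attacker who can test $10^{18}$ candidates per second:

$$
\frac{2^{128}}{10^{18}\,\text{s}^{-1}} \approx \frac{3.4 \times 10^{38}}{10^{18}\,\text{s}^{-1}} = 3.4 \times 10^{20}\,\text{s} \approx \frac{3.4 \times 10^{20}\,\text{s}}{3.16 \times 10^{7}\,\text{s/year}} \approx 1.1 \times 10^{13}\ \text{years}
$$

That is roughly 800 times the age of the universe (about $1.4 \times 10^{10}$ years).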

As concerns about AI grow, initiatives like C2PA, built through collaboration among industry leaders, show that the right tools let us manage these powerful technologies and protect the authenticity of our digital experiences.
